streamtasks

Demos

- Overview

- Interactive voice chat with ASR

- Standalone ASR

- llama.cpp chatbot

- Playing sound effects

- llama.cpp + TTS

- Video layout and video viewer

- Audio switching

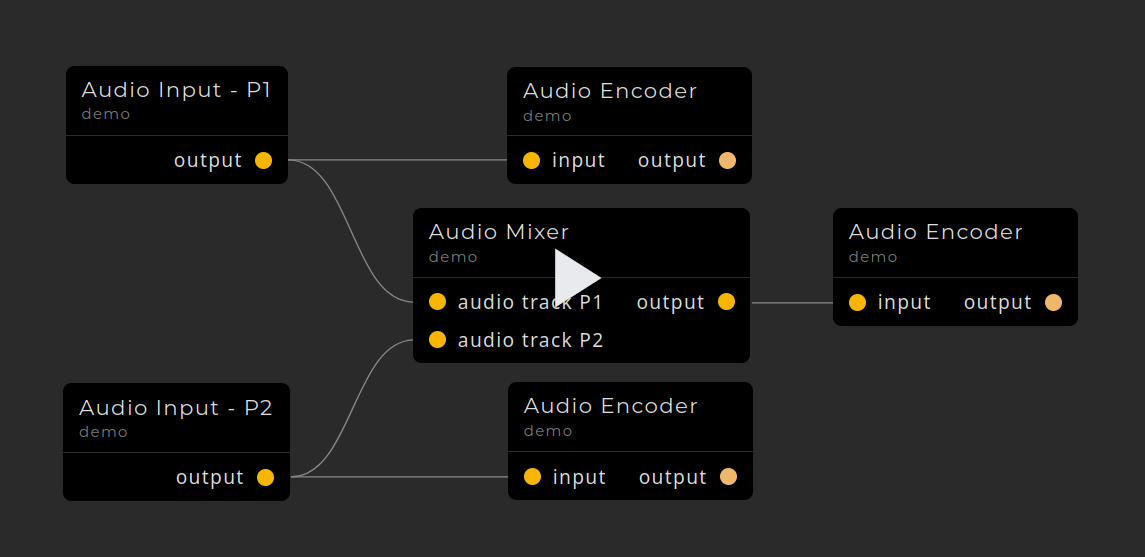

- Audio mixing and scaling

- Publishing a livestream

- Ollama chat (custom task)

Overview

Streamtasks aims to simplify software integration for data pipelines.

How it works

Streamtasks is built on an internal network that distributes messages between components. The network is host-agnostic: services running in the same process communicate over the same network, and in the same way, as services running on a remote machine.
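The idea of a host-agnostic network can be illustrated with a toy model. The sketch below is hypothetical and does not use the streamtasks API: Switch, LocalLink, subscribe and publish are illustrative names chosen for this example. A publisher only names a topic; whether a subscriber sits on the same switch or behind a link is invisible to it. The link round-trips each message through JSON to stand in for the serialization a real network connection would need.

```python
import json
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, object], None]


class Switch:
    """Toy message switch (hypothetical, not the streamtasks API).

    Publishers and subscribers only deal with topics; they never learn whether
    the other side lives in this process or behind a link.
    """

    def __init__(self) -> None:
        self.handlers: dict[str, list[Handler]] = defaultdict(list)
        self.links: list["LocalLink"] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.handlers[topic].append(handler)

    def publish(self, topic: str, payload: object, via: "LocalLink | None" = None) -> None:
        # Deliver to handlers attached to this switch...
        for handler in self.handlers[topic]:
            handler(topic, payload)
        # ...then forward over every link except the one the message arrived on.
        # This simple rule is only loop-free for tree-shaped topologies.
        for link in self.links:
            if link is not via:
                link.forward(self, topic, payload)


class LocalLink:
    """Joins two switches, round-tripping payloads through JSON to mimic
    what a network link (e.g. TCP between machines) would have to do."""

    def __init__(self, a: Switch, b: Switch) -> None:
        self.a, self.b = a, b
        a.links.append(self)
        b.links.append(self)

    def forward(self, sender: Switch, topic: str, payload: object) -> None:
        target = self.b if sender is self.a else self.a
        wire = json.dumps({"topic": topic, "payload": payload})  # "send"
        msg = json.loads(wire)  # "receive"
        target.publish(msg["topic"], msg["payload"], via=self)
```

For example, with three switches chained as a, b and c, a message published on b reaches subscribers on both a and c without b knowing where they are.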

Getting started

Installation

Simple

Windows (with an MSI)

Go to the latest release on GitHub and download the .msi file. Once the download has finished, run it.

Or watch the demo.

Linux (with flatpak)

Go to the latest release on GitHub and download the .flatpak file. If you have a GUI for managing flatpak packages installed, you can simply double-click the file. Otherwise, run flatpak install <the downloaded filename>.

Or watch the demo.

With pip

pip install "streamtasks[media,inference]" # see pyproject.toml for the other optional extras

Hardware encoders and decoders

To use hardware encoders and decoders, you must have ffmpeg installed on your system, and PyAV (the av package) must be built against it. First verify that your system's ffmpeg provides the hardware encoders and decoders you need:

# list decoders
ffmpeg -decoders

# list encoders
ffmpeg -encoders
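The full lists are long. A small Python helper can pick out the likely hardware entries. This is a convenience sketch, not part of streamtasks: parse_codec_list, filter_hardware, system_hardware_codecs and HW_MARKERS are hypothetical names, and the marker list is a heuristic based on common ffmpeg naming (for example h264_nvenc, hevc_vaapi, h264_qsv). It relies only on the ffmpeg flags -encoders, -decoders and -hide_banner.

```python
import shutil
import subprocess

# Heuristic (assumption): substrings that ffmpeg commonly uses in the names of
# hardware-backed codecs (NVIDIA, Intel Quick Sync, VA-API, Apple, AMD, V4L2).
HW_MARKERS = ("nvenc", "cuvid", "qsv", "vaapi", "videotoolbox", "amf", "v4l2m2m")


def parse_codec_list(text: str) -> dict[str, str]:
    """Parse `ffmpeg -encoders` or `ffmpeg -decoders` output into {name: description}."""
    codecs: dict[str, str] = {}
    in_table = False
    for line in text.splitlines():
        stripped = line.strip()
        if not in_table:
            # The flag legend ends with a row of dashes; codec rows follow it.
            in_table = stripped.startswith("---")
            continue
        parts = stripped.split(None, 2)  # flags, name, description
        if len(parts) >= 2:
            codecs[parts[1]] = parts[2] if len(parts) == 3 else ""
    return codecs


def filter_hardware(names) -> list[str]:
    """Keep only the codec names that contain one of HW_MARKERS."""
    return sorted(n for n in names if any(m in n for m in HW_MARKERS))


def system_hardware_codecs(kind: str = "encoders") -> list[str]:
    """Run the system ffmpeg (`kind` is "encoders" or "decoders") and filter its list."""
    ffmpeg = shutil.which("ffmpeg")
    if ffmpeg is None:
        raise FileNotFoundError("ffmpeg was not found on PATH")
    result = subprocess.run(
        [ffmpeg, "-hide_banner", f"-{kind}"],
        capture_output=True, text=True, check=True,
    )
    return filter_hardware(parse_codec_list(result.stdout))
```

Calling print(system_hardware_codecs("encoders")) prints the matching encoder names. An empty list suggests that this ffmpeg build has no hardware encoders, or that they use names outside the heuristic.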

Then install streamtasks without the prebuilt av binaries, so that av is compiled against your system's ffmpeg:

pip install "streamtasks[media,inference]" --no-binary av

If you have already installed streamtasks (and av), you can rebuild av from source with:

pip install av --no-binary av --ignore-installed

See the PyAV documentation for more information.
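To confirm that the rebuilt av actually picked up the hardware codecs listed by your system's ffmpeg, you can compare the names against PyAV's own codec table, av.codecs_available. The helper below is a hypothetical sketch, not part of streamtasks; missing_codecs is an illustrative name, and the codec names in the usage note are examples only.

```python
from typing import Iterable, Optional


def missing_codecs(wanted: Iterable[str], available: Optional[Iterable[str]] = None) -> list[str]:
    """Return the codec names in `wanted` that the installed PyAV build lacks.

    By default the check uses av.codecs_available, the set of codec names
    provided by the FFmpeg libraries PyAV is linked against.
    """
    if available is None:
        import av  # imported lazily so the helper can be tested without PyAV
        available = av.codecs_available
    return sorted(set(wanted) - set(available))
```

For example, missing_codecs(["h264_nvenc", "hevc_nvenc"]) returns an empty list if PyAV can see both NVENC encoders. A non-empty result after a source build usually means av is still using a bundled ffmpeg instead of the system one.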

llama.cpp with GPU

To install llama.cpp with GPU (CUDA) support, you can either install streamtasks with:

CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install streamtasks[media,inference]

or you can reinstall llama-cpp-python with:

CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python --ignore-installed

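The CMake flag depends on the llama-cpp-python version: older releases use -DLLAMA_CUBLAS=on, while newer releases use -DGGML_CUDA=on. See the llama-cpp-python documentation for more information.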

Running an instance

You can run an instance of the streamtasks system with streamtasks -C or python -m streamtasks -C.

The flag -C indicates that the core components should be started as well.

Use streamtasks --help for more options.

Connecting two instances (demo)

When connecting two instances, one of them, the main instance, must run the core components (started with -C).

To create a connection endpoint (server), either use the Connection Manager in the UI or pass a URL to serve on with the --serve command-line flag.

For example:

streamtasks -C --serve tcp://127.0.0.1:9002

You may specify multiple serve URLs.

To connect a second instance to the main instance, start it without the core components (omit -C) and pass the main instance's server URL with --connect.

For example:

streamtasks --connect tcp://127.0.0.1:9002
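For local testing, both commands can be started from one Python script. This is a sketch under stated assumptions: instance_commands and launch_pair are hypothetical helper names, it uses only the python -m streamtasks entry point and the -C, --serve and --connect flags shown above, and the fixed startup_delay is a guess at how long the main instance needs to open its server before the second instance connects.

```python
import subprocess
import sys
import time


def instance_commands(url: str = "tcp://127.0.0.1:9002") -> tuple[list[str], list[str]]:
    """Build the two command lines from the example above."""
    main = [sys.executable, "-m", "streamtasks", "-C", "--serve", url]
    secondary = [sys.executable, "-m", "streamtasks", "--connect", url]
    return main, secondary


def launch_pair(url: str = "tcp://127.0.0.1:9002", startup_delay: float = 2.0):
    """Start the main instance, wait, then start the connecting instance.

    The fixed delay is an assumption: it gives the main instance time to open
    its server before the second instance tries to connect.
    """
    main_cmd, secondary_cmd = instance_commands(url)
    main = subprocess.Popen(main_cmd)
    time.sleep(startup_delay)
    secondary = subprocess.Popen(secondary_cmd)
    return main, secondary
```

To stop both instances, call terminate() on the two returned Popen objects, secondary first. Across machines, replace 127.0.0.1 with an address of the main instance that the second machine can reach.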

See connection for more information.